It's 2025 But Is It 1995 or 1998?

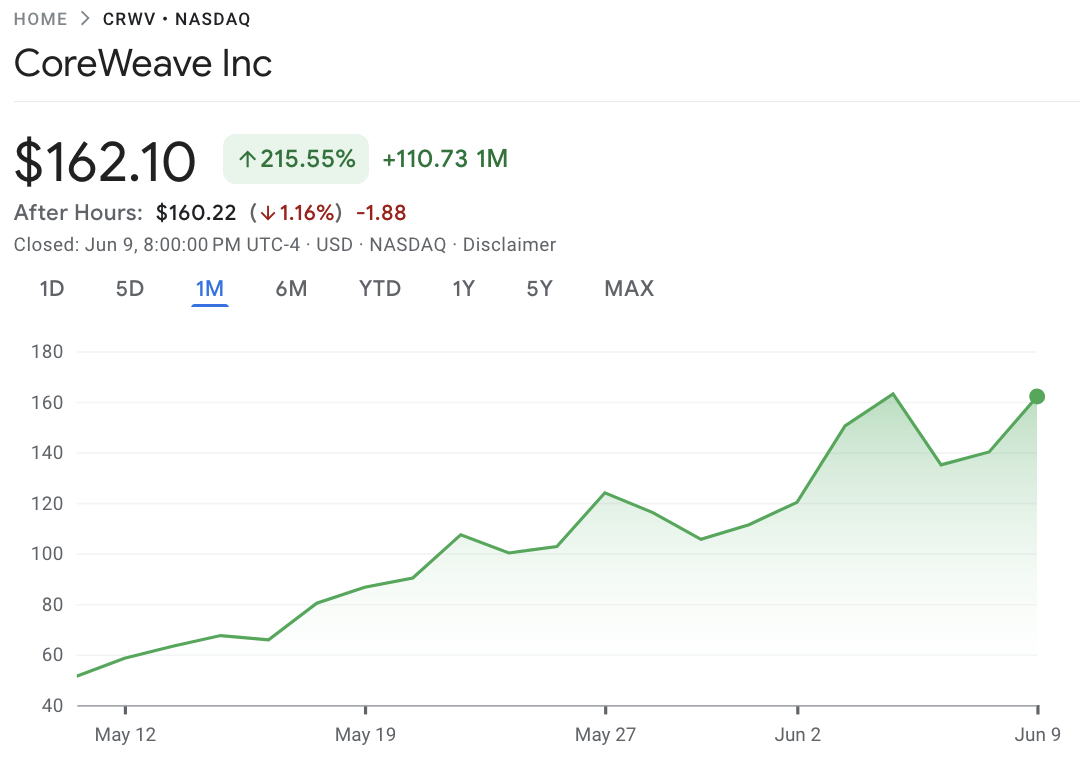

Coreweave chart June 9th 2025


I just watched CoreWeave go up 5 or 10% every single day for the last several weeks, up 215% in a single month. It’s probably the single highest-beta Nvidia play you can put money into right now on the public markets. If you have been living under a rock, they are a major HPC (high performance computing) datacenter company and their revenue growth last year was 420%. I shit you not - you read that right: a $77.8 billion market cap, $1 billion in revenue, and more than 400% revenue growth.

The reason I mention this is because another wave of AI frenzy in capital markets has begun.

History is path dependent and the path is set. We are sprinting toward AGI and ASI and the applications are growing every day.

A year ago (maybe more like 18 months ago) it felt like there were a lot of people not really taking this seriously. The phrase “stochastic parrot” was being pejoratively used to describe LLMs. Midjourney was good but you couldn’t really achieve great adherence to your prompt.

Contrast that with today. Here is a video I generated in VEO 3 with an AI voice reading word for word the script I typed in (it’s a commercial for my startup).

By the way that video was a one-shot. It used 20 credits and I think you get 1000 a month for 20 bucks. The high fidelity videos cost 100 credits, so they are about $2 a video.
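To make the per-video math explicit, here is a quick back-of-the-envelope sketch. It only uses the numbers quoted above (and remember the plan size is my "I think" figure, not an official price list); the function names are made up for illustration.

```python
# Figures as quoted in the post; the plan size is the author's recollection,
# not a verified price list.
PLAN_PRICE_USD = 20.0   # monthly subscription price
PLAN_CREDITS = 1000     # credits included per month

def cost_usd(credits: int) -> float:
    """Dollar cost of spending `credits` at the plan's effective rate."""
    return credits * PLAN_PRICE_USD / PLAN_CREDITS

def videos_per_month(credits_per_video: int) -> int:
    """How many videos of a given credit cost fit in one month's allowance."""
    return PLAN_CREDITS // credits_per_video

one_shot_cost = cost_usd(20)        # the 20-credit video described above
high_fidelity_cost = cost_usd(100)  # the 100-credit high fidelity tier

print(f"20-credit video:  ${one_shot_cost:.2f}, {videos_per_month(20)} per month")
print(f"100-credit video: ${high_fidelity_cost:.2f}, {videos_per_month(100)} per month")
```

So the one-shot above works out to roughly 40 cents, and a month of the plan covers about ten of the high fidelity videos.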

I’m going to guess VEO4 will output stuff actually good enough to run as ads. Imagine Hollywood spending $1 million per film on AI-generated shots instead of $50 million on handmade CGI.

If at this point you think the demand for tokens is not going to increase exponentially you are fundamentally misunderstanding reality.

By the way, let’s not forget about Studio Ghibli week in late March, when OpenAI rolled out its new image model in ChatGPT and created a worldwide phenomenon.

Chain of thought & iterative agent demand

When DeepSeek R1 was released in January, we were able to see the full chain of thought tokens in a response from a thinking model for the first time. That was also a fundamental shift.

The Claude Sonnet 3.5 October 2024 update was a major game changer in the ability to code with AI, but it pales in comparison to chain of thought (COT).

I almost exclusively use COT models to code now, and I find that they produce better output and fewer bugs because they are able to spend a few cycles of tokens double checking things and avoiding obvious mistakes. I am personally burning through millions of tokens per day using COT models for various coding tasks.

But even better now is the fact that “Agent mode” actually works well. You can ask it to do something and it takes multiple actions iteratively. Each step uses a COT model, so it can make a change, run a linter, run a command, check for errors, run another command, etc.
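If it helps to picture that loop, here is a minimal sketch of the check-fix-recheck cycle. This is not how any particular product implements agent mode; `run_agent`, `StepResult`, and the toy check and "model" below are all hypothetical stand-ins so the example runs without an API key.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class StepResult:
    ok: bool   # did the linter / command / test pass?
    log: str   # output that gets fed back to the model

def run_agent(
    propose_fix: Callable[[str], None],   # stand-in for a model call that edits code
    run_check: Callable[[], StepResult],  # stand-in for a linter or test command
    max_steps: int = 5,
) -> Tuple[bool, List[str]]:
    """Run checks, feed failures back to the model, stop on success or budget."""
    history: List[str] = []
    for _ in range(max_steps):
        result = run_check()
        history.append(result.log)
        if result.ok:
            return True, history
        propose_fix(result.log)  # each of these calls burns more tokens
    return False, history

# Toy environment: a "repo" with two outstanding problems.
pending = ["lint: unused import", "test: off-by-one"]

def toy_check() -> StepResult:
    if pending:
        return StepResult(ok=False, log=pending[0])
    return StepResult(ok=True, log="all checks passed")

def toy_model_fix(error_log: str) -> None:
    pending.remove(error_log)  # pretend the model fixed exactly this error

done, history = run_agent(toy_model_fix, toy_check)
print(done, history)
```

The point of the sketch is the shape of the loop: every failed check means another model call, which is why agent mode multiplies token usage compared with a single prompt and response.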

If the models could reason faster I would probably use this more and be willing to pay more.

Autonomous Agents

There is also a new crop of actually useful autonomous agents. With OpenAI’s Codex product you can now connect your repo directly and give it a command to let it churn through tasks (and tokens), and come back to a ready-made pull request later.

Claude Code & Codex are both headless. But there are other agent products like Anthropic Computer Use, OpenAI Operator, or Manus that control a virtual machine (or your computer), iteratively screenshot the screen, and take actions through the same inputs a human would use, like mouse clicks and keystrokes.

Once these computer‑controlling agent products get more capable the demand here will also be infinite. This is where you are actually going to replace office workers.

Imagine people renting out offices full of computers where a minder walks up and down the aisle of dozens of AI controlled machines doing various tasks and intervening when they get stuck.

Actually, that’s totally unnecessary because it can all be done virtually and you flip between 20 agents to monitor from your own computer, but it’s a useful mental model of what is going on.

Deep research

Another massive token user is deep research. All of the big AI model makers now have “deep research” or “deep search” style features where you hand off an information-gathering task and come back a few minutes later, after hundreds of web searches have been performed on your behalf.

Actually it’s more useful to understand that all current forms of purely algorithmic web scraping are obsolete compared with how much more you can do with scraping using just a little bit of LLM intelligence in the mix.

AI Audio

I am rolling out an AI voice product to my customers now. This is the first time I felt like the new voice models were actually good, and almost every time I show it to a customer they are impressed. That’s because the last time they tried one was six to twelve months ago, if not longer, and the voice models got that good that fast.

Summarizing the big token uses

So what are the heavy applications of AI inference demand at this point?

- Video generation.

- Audio generation.

- COT model usage (in various forms).

- Deep research products.

- Headless autonomous task runners - OpenAI Codex, Claude Code, etc.

- Headed task runners - OpenAI Operator, Anthropic Computer Use, Manus, etc.

- Image generation.

I’m sure I’m missing something, or new major applications will come up. One that will happen eventually is robotics. Example: Figure AI’s Helix model, a Vision-Language-Action (VLA) framework announced on February 19, 2025.

The Dot-Com Parallels

So I would argue this is more like 1994 or 1995 than 1998. It feels like we are closer to the beginning of these new AI models becoming intertwined with everything we do than to the peak.

There are just so damn many business applications to this stuff.

I’m not sure if the dawn of the internet was like this, but it feels to me like the gulf between the AI-pilled people who are taking advantage of these new capabilities and the people who are ignoring it all is widening dramatically.

I’m not sure if it’s different this time because the use cases are more business oriented and less social. To me it is intuitive that social phenomena should always grow faster than those related to productivity. But the winners of productivity advancement should be able to capture massive economic benefits faster than laggards.

I do think it’s correct to worry about malinvestment in general with technology, but what’s particularly interesting is that, relatively speaking, we are now in a high interest rate environment and it doesn’t seem to be deterring capital from flooding into AI and AI infrastructure.

Feel the AGI

I have been very AI pilled since GPT3. So much so that as soon as I was able to, I started a new company to build an AI product. That’s about as much conviction as you can have in my opinion.

But it wasn’t maybe until this week that I really felt how imminent everything was.

The fact that VEO 3 just one-shotted a video where the character perfectly read off the script was pretty insane to me.

Using OpenAI’s Codex agent for the first time recently also left a really big impression on me. These autonomous agent products are going to be huge.

There will be a whole new proliferation of products around managing agents: queueing tasks, slicing up and dividing work, and coming up with work and keeping track of it.

Maybe it will look like how we manage work today but maybe not.

All I can say is this: I used to be a little bearish on coding, and now I’m less so. Rather than needing fewer programmers, I think we will need more. We will be able to automate nearly everything, and the demand for automators, minders, and operators will be infinite.